This page constitutes my required external learning portfolio for CS 7495, Computer Vision, taken in Fall 2011. In it, I discuss what I have learned throughout the course, my activities and findings, how I think I did, and what impact it had on me.

About me

I am a coffee fanatic who is into computers, photography, computer vision, computational photography, and electronics. I am currently working toward a Master’s Degree in Computer Science at Georgia Tech. My passion is using GPUs for computer vision, and I’m also a part-time software engineer at AccelerEyes (the people who make Jacket: the GPU plug-in for Matlab).

Foundation: Reading and Assignments

In the course, we completed several assignments on the foundations of computer vision, after reading the relevant material in the textbook.

- The homework assignments covered topics in geometry, signal processing, multiple images, segmentation, tracking, and object detection. Each one covered different algorithms, such as least-squares fitting, convolution filters, disparity and depth, graph cuts, Kalman filtering, and support vector machines.

- My favorite assignment was the one covering SVMs. After it, I had a better understanding of how support vector machines work and which applications they are useful for.

Skills: Mini-projects

In each of three mini-projects, I chose to research a problem relevant to my future career, proposed a solution, implemented it, and described it in a mini-conference paper.

(collaborator: Brian Hrolenok)

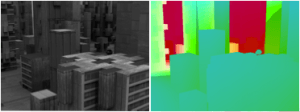

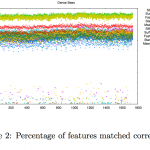

Project 1: Measuring Feature Stability in Video

Our purpose in this project was to propose and evaluate a set of metrics for comparing combinations of OpenCV’s feature detector/descriptor pairs on domain-specific video (ants and bees). Of the metrics used, the one that most clearly illustrates feature performance is the percentage of correctly tracked features. We learned that how often assignments are incorrect is more useful than how incorrect they are when comparing these detectors/descriptors, and that the choice of detector has more impact on performance than the choice of descriptor. In our tests the SURF detector consistently ranked well on every metric, making it the most robust detector we tested. We also gained a good intuition for how each detector and descriptor works under the hood by watching them all under controlled conditions.
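A minimal sketch of a "percentage of correctly tracked features" metric, assuming ground-truth positions are available for every tracked feature and that a feature counts as correct when it lands within a fixed pixel tolerance. The function name and the 3-pixel tolerance are hypothetical, not taken from the paper. Because it counts how often a feature is wrong rather than how far off it is, it measures the frequency of incorrect assignments rather than their magnitude.

```python
import math


def fraction_correctly_tracked(tracked, ground_truth, tol_px=3.0):
    """Return the fraction of features whose tracked (x, y) position lies
    within tol_px pixels of its ground-truth position (hypothetical criterion)."""
    if len(tracked) != len(ground_truth):
        raise ValueError("tracked and ground_truth must have the same length")
    if not tracked:
        return 0.0
    correct = sum(
        1
        for (x, y), (gx, gy) in zip(tracked, ground_truth)
        if math.hypot(x - gx, y - gy) <= tol_px
    )
    return correct / len(tracked)


# Two of the three features land within 3 px of their ground truth.
tracked = [(10, 10), (20, 21), (30, 40)]
truth = [(10, 11), (20, 20), (35, 35)]
print(fraction_correctly_tracked(tracked, truth))
```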

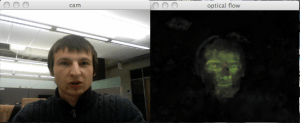

Project 2: GPU TV-L1 Optical Flow

In this project, we implemented and compared two optical flow algorithms on the GPU using OpenCL: Horn-Schunck and TV-L1 (L1-norm Total Variation) optical flow. The Horn-Schunck method is relatively simple and fast, but produces larger single-pixel errors and cannot capture large motions very well. For TV-L1, flow is computed on coarse sub-sampled images using an optimization formulation of the problem, and then propagated as an initial estimate for iterative solving at the next pyramid level. Increasing the number of pyramid levels is more costly in running time than increasing the number of inner iterations, as pyramids involve more memory-transfer overhead. While the alternative formulation of the L1 optimization problem leads to a more robust solution, probably the most important improvements TV-L1 has over standard Horn-Schunck are the image pyramid, which handles larger-scale motion, and the median filter, which removes outliers.

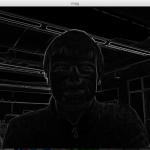

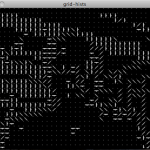

Project 3: HOG Features on the GPU

Our original premise for this project was that we could compute HOG features efficiently on the GPU, and that using HOG features would improve classification performance. Unfortunately, we were only able to show the former. Our implementation of the HOG feature descriptor runs in real time: 0.06 seconds to compute HOG features with 16 × 16 cells and 8 bins per histogram for a 640 × 480 frame on a consumer laptop. Our naive sliding-window approach to classification was quite simple, but slower than we had hoped. Although we made a few attempts, in the given time frame we were unable to get the SVM to train properly on our limited face-detection dataset. We also built a neat real-time visualization of each cell’s dominant histogram orientation (the canonical HOG display) on live webcam input.
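The core of a HOG descriptor is a magnitude-weighted histogram of gradient orientations for each cell. The sketch below computes one such histogram with 8 unsigned-orientation bins (0 to 180 degrees), as in the 8-bin setting above, and picks the dominant bin, which is what the per-cell visualization draws. It is a serial plain-Python illustration, not our OpenCL kernel: it assumes central differences and hard bin assignment, and it omits the block normalization and interpolated voting used in a full descriptor. The tiny 6 × 6 cell is only for illustration.

```python
import math


def cell_histogram(cell, n_bins=8):
    """Magnitude-weighted histogram of unsigned gradient orientations
    (0 to 180 degrees) for one cell, given as a list of rows of gray values.
    Uses central differences on interior pixels and hard bin assignment."""
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins
    h, w = len(cell), len(cell[0])
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            mag = math.hypot(gx, gy)
            if mag == 0.0:
                continue
            # Fold signed angles into [0, 180): the gradient sign is ignored.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % n_bins] += mag
    return hist


def dominant_orientation(hist):
    """Center angle (degrees) of the strongest bin, i.e. what the per-cell
    HOG visualization draws as a line."""
    bin_width = 180.0 / len(hist)
    best = max(range(len(hist)), key=lambda i: hist[i])
    return (best + 0.5) * bin_width


# A 6x6 cell with a vertical step edge: all gradient energy points along x.
cell = [[0, 0, 0, 100, 100, 100] for _ in range(6)]
hist = cell_histogram(cell)
print(hist, dominant_orientation(hist))
```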

Open Source!

Demo Video!

How we did:

Overall, each project presented us with unique challenges and opportunities to learn. I feel my group and I performed well on every project, especially given the time constraints and that all of us had multiple other classes with projects due. The most important lesson from these projects was how to plan and manage the limited time available. The first project was meant as a “warm-up”, but turned out to be quite involved and interesting. Of the three, the second project was by far the coolest paper to implement and demo. The last project was also fun and had an impressive demo (even though we didn’t get the SVM classification step working).

Links to project papers:

- paper1 – Measuring Feature Stability in Video

- paper2 – GPU TV-L1 Optical Flow

- paper3 – HOG Features on the GPU

My favorite Project: GPU TV-L1 Optical Flow

Problem

Determining optical flow, the pattern of apparent motion of objects caused by the relative motion between the observer and objects in the scene, is a fundamental problem in computer vision. Given two images, the goal is to compute the 2D motion field: the projection of the 3D velocities of surface points onto the imaging surface. Optical flow can be used in a wide range of higher-level computer vision tasks, from object tracking and robot navigation to motion estimation and image stabilization.

There is a real need to shorten the computation time of optical flow for practical applications such as robot motion analysis and security systems. With advances in using GPUs for general computation, it has become feasible to use more accurate (but computationally expensive) optical flow algorithms in these applications.

With that in mind, we propose to implement an improved L1-norm based total-variation optical flow (TV-L1) on the GPU.

Related Works

Graphics Processing Units (GPUs) were originally developed for fast rendering in computer graphics, but high-level languages such as CUDA and OpenCL have recently enabled general-purpose GPU computation (GPGPU). This is useful in computer vision and pattern recognition; Mizukami2007, for example, implemented the Horn-Schunck method on GPUs using CUDA.

Two well-known approaches to computing optical flow are the point-based, global variational method of Horn and Schunck (Horn1981) and the local patch-based method of Lucas and Kanade (Lucas1981). Our approach is an improvement of Horn1981 that replaces the squared measure of error with an L1 norm. The highly data-parallel nature of the solution lends itself well to a GPU implementation.

Approach

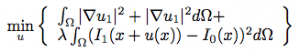

The standard Horn-Schunck algorithm minimizes the energy

$$E(\mathbf{u}) = \int_\Omega \alpha^2\left(\lVert\nabla u_x\rVert^2 + \lVert\nabla u_y\rVert^2\right)d\mathbf{x} + \int_\Omega \left(I_x u_x + I_y u_y + I_t\right)^2 d\mathbf{x}$$

where the first integral is known as the regularization term and the second as the data term. An iterative solution to this optimization problem is given by two update equations in terms of the flow field $\mathbf{u} = (u_x, u_y)$, a weighted local average $\bar{\mathbf{u}}$ of the flow, the gradient images $I_x$, $I_y$, and $I_t$ in $x$, $y$, and $t$ respectively, and $\alpha$, a small constant weighting the regularization term:

$$u_x^{k+1} = \bar{u}_x^{k} - \frac{I_x\left(I_x\bar{u}_x^{k} + I_y\bar{u}_y^{k} + I_t\right)}{\alpha^2 + I_x^2 + I_y^2}, \qquad u_y^{k+1} = \bar{u}_y^{k} - \frac{I_y\left(I_x\bar{u}_x^{k} + I_y\bar{u}_y^{k} + I_t\right)}{\alpha^2 + I_x^2 + I_y^2}$$

These two equations come from solving the Euler-Lagrange equations associated with the energy function, using a finite-difference approximation of the Laplacian of the flow.
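Once the local averages are known, the Horn-Schunck update is a purely per-pixel computation, which is what makes it a good fit for one GPU work-item per pixel. Below is a serial plain-Python sketch of that iteration, assuming the gradient images I_x, I_y, I_t are already computed. It uses the classic Horn-Schunck averaging weights (1/6 for edge neighbours, 1/12 for diagonals) with clamped borders; the function names and the `alpha` default are illustrative, not the parameters of our OpenCL kernels, and `n_iter=60` simply mirrors the iteration count used in our tests.

```python
def local_average(f):
    """Horn-Schunck neighbourhood average: 1/6 for the 4 edge neighbours and
    1/12 for the 4 diagonal neighbours, with borders clamped."""
    h, w = len(f), len(f[0])

    def at(y, x):
        return f[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [
        [
            (at(y - 1, x) + at(y + 1, x) + at(y, x - 1) + at(y, x + 1)) / 6.0
            + (at(y - 1, x - 1) + at(y - 1, x + 1) + at(y + 1, x - 1) + at(y + 1, x + 1)) / 12.0
            for x in range(w)
        ]
        for y in range(h)
    ]


def horn_schunck(Ix, Iy, It, alpha=1.0, n_iter=60):
    """Iterate u <- u_bar - I (I_x u_bar_x + I_y u_bar_y + I_t) / (alpha^2 + I_x^2 + I_y^2).
    Each pixel's update depends only on the previous iteration's averages,
    so every pixel could be handled by a separate GPU work-item."""
    h, w = len(Ix), len(Ix[0])
    ux = [[0.0] * w for _ in range(h)]
    uy = [[0.0] * w for _ in range(h)]
    for _ in range(n_iter):
        ax, ay = local_average(ux), local_average(uy)
        for y in range(h):
            for x in range(w):
                ix, iy, it = Ix[y][x], Iy[y][x], It[y][x]
                t = (ix * ax[y][x] + iy * ay[y][x] + it) / (alpha ** 2 + ix * ix + iy * iy)
                ux[y][x] = ax[y][x] - ix * t
                uy[y][x] = ay[y][x] - iy * t
    return ux, uy


# Synthetic check: constant I_x = 1, I_y = 0, I_t = -1 is consistent with ux = 1.
Ix = [[1.0] * 5 for _ in range(4)]
Iy = [[0.0] * 5 for _ in range(4)]
It = [[-1.0] * 5 for _ in range(4)]
ux, uy = horn_schunck(Ix, Iy, It)
```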

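The TV-L1 approach minimizes

$$E(\mathbf{u}) = \int_\Omega \left(\lVert\nabla u_x\rVert + \lVert\nabla u_y\rVert\right)d\mathbf{x} + \lambda\int_\Omega \left|I_1(\mathbf{x} + \mathbf{u}(\mathbf{x})) - I_0(\mathbf{x})\right| d\mathbf{x}$$

which replaces the squared error terms with L1 norms: total variation in the regularizer and an absolute difference in the data term. Wedel et al. propose an iterative solution that alternately updates the flow $\mathbf{u}$ and an auxiliary variable $\mathbf{v}$, coupled through a parameter $\theta$. For fixed $\mathbf{u}$, $\mathbf{v}$ is updated point-wise by thresholding:

$$\mathbf{v} = \mathbf{u} + \begin{cases} \lambda\theta\,\nabla I_1 & \text{if } \rho(\mathbf{u}) < -\lambda\theta\,\lVert\nabla I_1\rVert^2 \\ -\lambda\theta\,\nabla I_1 & \text{if } \rho(\mathbf{u}) > \lambda\theta\,\lVert\nabla I_1\rVert^2 \\ -\rho(\mathbf{u})\,\dfrac{\nabla I_1}{\lVert\nabla I_1\rVert^2} & \text{if } |\rho(\mathbf{u})| \le \lambda\theta\,\lVert\nabla I_1\rVert^2 \end{cases}$$

and for fixed $\mathbf{v}$, each flow component is updated as $u_d = v_d + \theta\,\operatorname{div}\mathbf{p}_d$ for $d \in \{x, y\}$, where the dual variable $\mathbf{p}_d$ is computed iteratively with Chambolle's projection. Here

$$\rho(\mathbf{u}) = I_1(\mathbf{x} + \mathbf{u}_0) + \nabla I_1 \cdot (\mathbf{u} - \mathbf{u}_0) - I_0(\mathbf{x})$$

is the current residual, linearized around the flow $\mathbf{u}_0$ used to warp $I_1$. On top of this alternating optimization, we implement a median filter on the flow field, and use image pyramids in a coarse-to-fine approach to handle larger-scale motions. That is, flow is computed on coarse sub-sampled images and then propagated as an initial estimate for the next pyramid level.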
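A point-wise sketch of the auxiliary-variable (v) update in the TV-L1 scheme, written from the thresholding rule used in Wedel et al.'s approach: given the linearized residual rho(u) = I1(x + u0) + grad(I1)·(u − u0) − I0(x), v either takes a bounded step of size λθ along the image gradient or jumps to the point where the linearized residual is zero. The function name and the `lam`/`theta` values in the checks are placeholders; a full solver would apply this to every pixel and alternate it with the TV (u) update.

```python
def threshold_step(u, rho, grad, lam, theta):
    """One pixel of the TV-L1 auxiliary update.

    u     -- current flow vector (ux, uy)
    rho   -- linearized residual rho(u) at this pixel
    grad  -- gradient (I1x, I1y) of the warped second image
    lam, theta -- data weight and coupling parameter (placeholder values)
    """
    gx, gy = grad
    g2 = gx * gx + gy * gy
    step = lam * theta
    if rho < -step * g2:
        # Residual strongly negative: take the maximal step along +grad.
        return (u[0] + step * gx, u[1] + step * gy)
    if rho > step * g2:
        # Residual strongly positive: take the maximal step along -grad.
        return (u[0] - step * gx, u[1] - step * gy)
    if g2 == 0.0:
        # No gradient information (only reached when rho == 0): keep u.
        return u
    # Small residual: jump to where the linearized residual becomes zero.
    return (u[0] - rho * gx / g2, u[1] - rho * gy / g2)


print(threshold_step((0.0, 0.0), 0.2, (1.0, 0.0), lam=1.0, theta=0.5))
```

In the small-residual case the new v satisfies rho(u) + grad·(v − u) = 0, so the linearized brightness-constancy error at that pixel is driven to zero.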

In both algorithms, there are several points at which the iterative update of one pixel’s flow vector can be computed concurrently with those of its neighbors. This lets us improve performance over a serial implementation by using OpenCL. In particular, we wrote OpenCL kernels to compute flow updates, image gradients, and local flow averages for Horn-Schunck, as well as kernels for the u and v updates and for discrete forward and backward differences for TV-L1.
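The forward- and backward-difference kernels have to agree with each other: for the TV update to be consistent, the discrete divergence should be the negative adjoint of the discrete gradient, i.e. sum(grad(u)·p) = −sum(u·div(p)). Below is a plain-Python sketch of one common convention (forward differences with a zero last row/column for the gradient, matching backward differences for the divergence, as in Chambolle's TV projection). The function names are illustrative and this is not our OpenCL source.

```python
def gradient(u):
    """Forward differences; the last column (x) / last row (y) is set to zero."""
    h, w = len(u), len(u[0])
    gx = [[u[y][x + 1] - u[y][x] if x < w - 1 else 0.0 for x in range(w)] for y in range(h)]
    gy = [[u[y + 1][x] - u[y][x] if y < h - 1 else 0.0 for x in range(w)] for y in range(h)]
    return gx, gy


def divergence(px, py):
    """Backward differences matching `gradient`, so that
    sum(grad(u) . p) == -sum(u * div(p)) (divergence is minus the adjoint)."""
    h, w = len(px), len(px[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = (px[y][x] if x < w - 1 else 0.0) - (px[y][x - 1] if x > 0 else 0.0)
            dy = (py[y][x] if y < h - 1 else 0.0) - (py[y - 1][x] if y > 0 else 0.0)
            out[y][x] = dx + dy
    return out


# Check the adjoint relation on a small example.
u = [[1, 2, 3], [4, 6, 9]]
px = [[1, 0, 2], [3, -1, 5]]
py = [[2, 1, 0], [-1, 4, 7]]
gx, gy = gradient(u)
d = divergence(px, py)
lhs = sum(gx[y][x] * px[y][x] + gy[y][x] * py[y][x] for y in range(2) for x in range(3))
rhs = -sum(u[y][x] * d[y][x] for y in range(2) for x in range(3))
print(lhs, rhs)
```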

Side note: the first version of the TV-L1 code was ported from Matlab to C++ with LibJacket (ArrayFire), a high-level, Matlab-like GPU matrix library. Porting code from Matlab to LibJacket was almost a one-to-one translation, and LibJacket gives good performance nearly out of the box, compared with writing OpenCL/CUDA kernels for TV-L1 from scratch.

Results

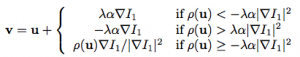

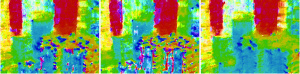

We ran both the Horn-Schunck and TV-L1 algorithms on a subset of the Middlebury dataset, and list the average and maximum endpoint error in pixels for both algorithms on each sequence. For this table, we ran Horn-Schunck for 60 iterations; more than 60 iterations showed minimal improvement. TV-L1 ran for 7 fixed-point iterations and used a pyramid of 7 images with a scaling factor of 0.9. The figures above illustrate the effect of the number of fixed-point iterations and the size of the image pyramid.
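Endpoint error is the Euclidean distance between an estimated flow vector and its ground-truth vector. A minimal sketch of the average/maximum computation, assuming both flow fields have been flattened into lists of (u, v) vectors and that pixels without valid ground truth have already been removed; the function name is illustrative.

```python
import math


def endpoint_errors(flow, gt):
    """Average and maximum endpoint error (pixels) between estimated and
    ground-truth flow, each given as a list of (u, v) vectors."""
    if len(flow) != len(gt) or not flow:
        raise ValueError("flow and gt must be non-empty and the same length")
    errs = [math.hypot(u - gu, v - gv) for (u, v), (gu, gv) in zip(flow, gt)]
    return sum(errs) / len(errs), max(errs)


avg_epe, max_epe = endpoint_errors([(1, 0), (0, 0), (3, 4)], [(1, 0), (0, 0), (0, 0)])
print(avg_epe, max_epe)
```

A single badly wrong vector barely moves the average but sets the maximum, which is why reporting both separates overall accuracy from single-pixel outliers.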

TV-L1 performs better than Horn-Schunck in almost all cases. The difference between the two methods is clearest on Urban2, which has the largest apparent motion of the group; this makes sense, since TV-L1's image pyramid lets it capture larger motion. Our testing also shows that Horn-Schunck produces larger single-pixel errors, which also makes sense because TV-L1 applies a median filter that can throw out such outliers.
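A median filter removes isolated outliers because a single wrong vector cannot move the median of its neighbourhood. Below is a sketch of a 3 × 3 median applied to one flow component with clamped borders; the window size and function name are assumptions, since the text does not state the window we used.

```python
import statistics


def median_filter3(f):
    """3x3 median filter over one flow component (rows of values), borders clamped."""
    h, w = len(f), len(f[0])
    return [
        [
            statistics.median(
                f[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


# A single outlier in an otherwise zero flow component disappears.
f = [[0.0] * 5 for _ in range(5)]
f[2][2] = 100.0
print(median_filter3(f)[2][2])
```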

The parameters used in our tests give each method roughly the same number of iterations: Horn-Schunck uses a fixed 60 iterations, and TV-L1 performs 7 fixed-point iterations at each of 7 pyramid levels (49 in total). For the same “budget” of iterations, changing the ratio of fixed-point iterations to pyramid levels changes the accuracy on a particular dataset: more pyramid levels let the algorithm capture larger motions, while more fixed-point iterations improve the flow estimate at each level. The figures above show this accuracy trade-off. In terms of running time, increasing the number of pyramid levels is more costly than increasing the number of inner iterations, as pyramids involve more memory-transfer overhead.
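The levels-versus-iterations trade-off is easiest to see in the shape of the coarse-to-fine loop. The sketch below builds a 7-level pyramid with a 0.9 scaling factor, as in our tests, and runs a per-level solver from coarsest to finest, upsampling the flow (and rescaling its vectors to the new resolution) as the next initial estimate. `solve_level` is a hypothetical placeholder for the fixed-point iterations at one level; the rounding of level sizes and the nearest-neighbour upsampling are simplifications, and a real implementation would also warp the second image and typically interpolate bilinearly.

```python
def pyramid_sizes(width, height, levels=7, scale=0.9):
    """(width, height) per level, finest first, each level `scale` times the previous."""
    return [
        (max(1, round(width * scale ** k)), max(1, round(height * scale ** k)))
        for k in range(levels)
    ]


def resize_nearest(f, new_w, new_h):
    """Nearest-neighbour resize of a 2-D list (rows of values)."""
    old_h, old_w = len(f), len(f[0])
    return [
        [f[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


def coarse_to_fine(width, height, solve_level, levels=7, scale=0.9):
    """Run solve_level(w, h, ux, uy) -> (ux, uy) from the coarsest level to the
    finest, using each result (upsampled and rescaled) as the next initial estimate."""
    ux = uy = None
    for w, h in reversed(pyramid_sizes(width, height, levels, scale)):
        if ux is None:
            ux = [[0.0] * w for _ in range(h)]
            uy = [[0.0] * w for _ in range(h)]
        else:
            # Flow is measured in pixels, so it grows with the resolution.
            sx, sy = w / len(ux[0]), h / len(ux)
            ux = [[v * sx for v in row] for row in resize_nearest(ux, w, h)]
            uy = [[v * sy for v in row] for row in resize_nearest(uy, w, h)]
        ux, uy = solve_level(w, h, ux, uy)
    return ux, uy


calls = []


def fake_solver(w, h, ux, uy):
    # Hypothetical stand-in for the fixed-point iterations at one level.
    calls.append((w, h))
    return ux, uy


ux, uy = coarse_to_fine(64, 48, fake_solver)
print(calls)
```

Every extra level adds a full resize of both flow components plus another image pair to process, which is one way to see why extra levels cost more than extra inner iterations on the GPU.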

What we learned

Looking back at the entire project, one important lesson learned is that while the alternative formulation of the optimization problem leads to a more robust solution, probably the most important improvements the TV-L1 approach has over standard Horn-Schunck are the inclusion of an image pyramid to handle larger scale motion, and the median filter to remove outliers.

The purpose of implementing these algorithms on the GPU was to gain performance over the standard serial versions. Our first, naive implementations of both TV-L1 and Horn-Schunck used global memory for all computations; an obvious item for future work is to optimize these kernels to use shared/texture memory.

Our current implementation approaches real time, running the Venus sequence at the settings mentioned previously at ~3 fps. Additionally, we developed a separate implementation of TV-L1 using LibJacket (a high-level, Matlab-like GPU matrix library), which improved the running time over a serial CPU implementation by orders of magnitude and indeed nears real-time performance.

Why this was my favorite

So much new cool stuff on a topic I find interesting: learned a lot about how optical flow works, wrote my first program in OpenCL, first time implementing and using image pyramids, actually produced a ‘fast’ GPU optical flow demo…

Self-assessment

Going into each project was pretty much, “Hey, I have heard of this topic before, and I would like to know more, let’s try to do/implement X”, without knowing in the slightest how to actually do X. <extreme time-limited focus ensues>. Then comes paper review day (when each project is due), and by then I have dived deep into the literature on the topic, implemented it, written about it, and have a neat demo ready to show off on presentation day. Every project taught me at least a few new things. After Project 1, I know which feature detector/descriptor pair in OpenCV is best suited for which tasks. After Project 2, I know how to program in OpenCL, and have a better understanding of one of the top-performing optical flow methods, along with a GPU implementation of it. After Project 3, I have more OpenCL experience, a deep understanding of the HOG feature descriptor, and a real-time GPU implementation of it. Getting to choose interesting topics made the projects fly by (hmm, or was it the ~2 week deadlines?).

I am proudest of the second project, mainly because we had a cool demo (GPU optical flow on my laptop), and I had never used OpenCL before and got to learn it fast and put it to good use. The first project was more of a warm-up, but still interesting and useful to know. While we didn’t propose it, the last project would have been better if we had gotten the SVM trained properly for face detection, for an even more impressive demo. All projects took a lot of focused effort in a small amount of time. Overall, I got quite a lot out of this course, from learning about topics from top professors in the field at GaTech to getting intense hands-on project experience on interesting topics.

Comments for the Instructors

What I particularly liked about the course:

I enjoyed the free-form, involved projects; having the flexibility to choose topics within computer vision let each group pursue something it found interesting.

Final Comments

I’m glad that I signed up for this course. Even though most of the topics covered were not completely new to me, the gaps in my knowledge of the literature on each subject discussed were filled in, and I gained further insight and perspective into topics I was already familiar with.
