As a final assignment/write-up for my CS6601 Artificial Intelligence class at Georgia Tech, this learning portfolio was made to summarize what I had learned throughout the course…

CS 6601 Learning Portfolio

This page constitutes my learning portfolio for CS 6601, Artificial Intelligence, taken in Spring 2011. In it, I discuss what I have learned throughout the course, my activities and findings, how I think I did, and what impact it had on me.

About me

- I am a coffee/caffeine addict that is into computers, programming, photography, computer vision, electronics, and BMX. I am currently working toward a Master’s Degree in Computer Science at Georgia Tech. My passion is in computer vision, and I’m also a part-time software engineer at AccelerEyes (the people who make Jacket: the GPU plug-in for Matlab).

- My Website

Foundation: Reading and Assignments

In the course, we completed 7 assignments on the foundations of AI, after reading the relevant material in the textbook.

The assignments fell under 5 categories, each with certain topics covered:

- Problem Solving

- Search, A*, D*, CSP, SAT, Graph Coloring

- Knowledge, Reasoning and Planning

- Logic, CNF, Resolution, RRT

- Uncertain Knowledge and Reasoning

- Bayes Networks, Elimination, Factor Graphs

- Learning

- Decision trees, L1 L2 Normalization, KNN

- Communicating, Perceiving and Acting

- Natural Language Processing, N-grams

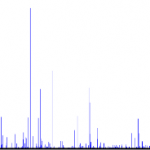

My favorite assignment was the last one dealing with Natural Language Processing and N-grams. The task was to write a program in any language (I chose Python) that parsed a text corpus of 100K+ words, generate a histogram of 1-grams,2-grams,3-grams. Then,generate a new ~100 word text using each of the n-gram histograms, and see how much sense each one makes. I found this to be a neat hands-on way to learn about N-grams, NLP, and the surprising frequencies of words in English texts.

The following table lists some results from two works (120K+ words) by Lucius Annaeus Seneca:

| top 5 words | count | top 5 bigrams | count | top 5 trigrams | count |

|---|---|---|---|---|---|

| the | 6162 | it is | 781 | it is a | 123 |

| and | 4690 | of the | 543 | it is not | 86 |

| of | 4382 | in the | 504 | and it is | 75 |

| to | 3991 | to be | 417 | there is no | 74 |

| a | 3419 | of a | 345 | it is the | 58 |

Here is a zoomed out view of the full histogram of all single words

Overall, I think that I performed well on most the assignments. Not having much AI background, some of the assignments were challenging, but I found the textbook and class slides to be valuable resources. I struggled the most with assignments related to first order logic, as I do not find it that interesting. My favorite assignments were the ones that involved writing code. The assignments synchronized well with the related topics covered in class each week.

Skills: Mini-projects

There were three mini-projects in which I chose to research a problem that was supposed to be relevant to my your future career. For each of these three projects, I proposed a solution, implemented it, and described it in a mini-conference paper.

These mini-projects really made the learning the course material fun, by going through the “entire” process of researching a problem, writing proposals, implementing a solution, and producing a paper. Additionally, having peer-review sessions for the papers and proposals was also a fun learning experience. Getting to really focus on one problem at a time helped me get a much deeper understanding of particular areas of interest.

Projects:

- A* vs D*Lite

- A* vs RRT

- MCMC Image Segmentation

I gained significant knowledge from each focused mini-project, while at the same time learning about paper writing, peer editing and time management. The first project was the hardest, because everything about the process and assignment structure was new, so at first it was difficult to gauge how long things would take. I quickly learned about how to go about proposing/researching/writing in more efficient ways through each project. The last project was the most interesting to work on, because it was related to computer vision and image processing, which I’m a big fan of.

My favorite Project

My favorite project that I worked on was comparing A* search with Rapidly exploring Random Trees (RRT) for navigating in a 3D space, mainly because my partner and I created a cool 3D visualization simulator that displayed the results.

Problem

Traditionally, path planning algorithms solve problems in two-dimensional space. Some applications, such as autonomous aerial vehicle planning, require guidance in three-dimensions. Aerial vehicle navigation is a complex problem because it may involve standard movements, such as forward and backward, as well as navigation over and under obstacles in the environment.

Related Work

Randomized potential fields is a sampling based planner that uses random-walks to escape local minima, using a potential function that tries to estimate the cost from any state configuration to the goal [cite](LaValle 2006). The main drawback, however, was that the method involved many heuristic parameters that had to be adjusted for each problem [cite](LaValle 2006).

Expansive-space planners attempt to explore new space by probabilistically choosing new verticies from different predetermined neighborhoods [cite]. The main drawbacks are that the planner requires substantial problem-specific parameter tuning [cite](LaValle 2006).

A is an extension of Dijkstra’s algorithm that tries to reduce the total

number of states explored by incorporating a heuristic estimate of the cost to

get to the goal from a given state [cite](Russell 2010). A is not originally for motion planning or random, but can be adapted as such for interesting comparisons.

Our Approach

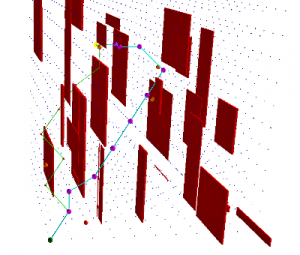

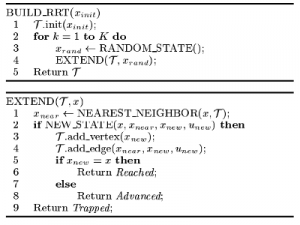

Rapidly-exploring Random Trees (RRTs) are introduced as a planning algorithm to quickly search high-dimensional spaces that have both algebraic constraints (obstacles) and differential constraints (nonholonomics and dynamics) [cite](LaValle 1998). The key idea is to bias exploration toward unexplored portions of the space by sampling from all points in the state space, and incrementally “pulling” the search tree toward them [cite] (LaValle 2001)

The basic RRT algorithm is given above. Each iteration of construction attempts to extend the RRT by adding a new vertex that is biased by a randomly-selected state x_{rand}. The EXTEND function selects the nearest vertex already in the RRT x_{near}, and applies a valid motion towards the randomly selected state input, creating a new node to be added to the RRT x_{new} [cite](LaValle 2001). This biases the RRT to rapidly explore the state space.

Results

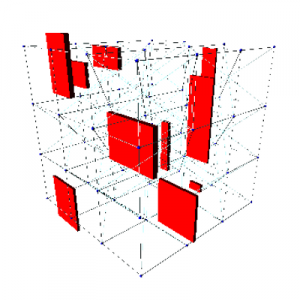

Rapidly exploring random trees and A* search were evaluated on randomly generated environments. A three dimensional grid of nodes and connections were created to form an undirected graph as shown in Figure 1. The connections in the graph were also given a uniform cost. The grid consisted of nodes that are spaced one hundred units apart arranged in a cube with an equal length, width, and height. The algorithms were tested on environments where the dimensions of the cube varied from 600 units to 1200 units. A number of variables and results were recorded, including: the number of nodes in the graph, the number of obstructions, the running time of both algorithms, the final path length of both algorithms, and the number of nodes that were expanded by both algorithms.

The random nature of RRTs generated interesting results with respect to A* search. In some cases, RRTs found a similar path as A*, but in about half of our simulations, RRTs found a path that is significantly longer. RRTs randomly choose a point that is some distance away from currently visited nodes and the algorithm then moves towards the point. This random movement continues until the goal node is reached. While A* is continually calculating the best path, RRTs are operating in a random fashion, which can produces sub-optimal results. In fact, it has been shown that as the number of samples increases, the RRT algorithm converges to an optimal solution with probability zero [cite](Karaman 2010).

Results also show that the run-time of RRT is on average slightly worse than A*, but still comparable. A longer run-time is expected due to the extra calculations and randomness in RRT. Sometimes, the run-time was over 100x slower than A*, probably due to a worst case ordering of sampling the state space. The majority of the time, both algorithms finished in well under 1 second.

In the experiments in this paper, only holonomic aerial vehicle planning was modeled. This was mainly for making the comparison to A* easier, as we found its difficult to model non-holonomic constraints with A*, but trivial with RRTs. In future work, more advanced vehicle modeling can be simulated, including vehicles with non-holonomic and kinodynamic constraints that can be compared to other motion planning algorithms.

My Take-Aways

This project allowed me to get a very firm grasp on how Rapidly-exploring Random Trees really work, and their applications – I had only a vague idea of RRTs and motion planning before this project. The coolest part of the project was watching both A* and RRT algorithms execute in the 3D visualization tool we created. The 3D view allowed insight to how each algorithm made choices along the way when planning, and also proved to be a useful debugging tool. I got to increase my Python skills when implementing RRT and the 3D visualization (in vpython).

Full Papers

Project 1 – Dynamic Route Re-planning

Project 2 – Aerial Vehicle Navigation Planning | Source Code

Project 3 – Image Segmentation via MCMC

Integration: Research Proposal

Towards the end of the course I was asked to write a more substantial research proposal that integrates AI into another discipline of my choosing.

Title

A Machine Learning Approach to Corner Detection

Problem

A common low-level operation in many computer vision systems is the detection

of “interesting'” parts, or features, within an image. Corners are robust, stable and well-defined image features, and correspond to points in the both the world and image spaces, making them important to many applications.

Corner detection is used as the first step of many vision tasks such as tracking, localization, SLAM (simultaneous localization and mapping), image matching, image stitching and object recognition [cite](Rosten 2008).

However, despite the massive increase in computing power since the inception of

corner detectors, it is still true that when processing live video streams at full

frame rate, especially on a mobile robot, existing feature detectors leave little if any time

for further processing [cite](Rosten 2008).

This paper proposes a unique approach to corner detection: combining a new

heuristic for feature detection with machine learning techniques to provide a robust, high-speed corner detection algorithm to use in real-time image processing applications [cite](Rosten 2008).

Related Works

SIFT: The Scale Invariant Feature Transform (SIFT) approach transforms an image into a large collection of local feature vectors, each of which is invariant to image translation, scaling, and rotation, and partially invariant to illumination changes and affine or 3D projection [cite](Lowe 1999). A Difference of Gaussian (DoG) kernel is computed at multiple scales (sizes) of the image to provide a stable scale-space technique [cite](Rosten 2006). SIFT features are very stable and robust to changes in illumination, rotation and scale of the features, and is widely used for image matching [cite](Lowe 1999). A disadvantage to SIFT is that it not affine-invariant, such as arbitrary viewpoint changes [cite](Rosten 2010).

Harris: The Harris corner detector functions by considering a local window in the image, and determining the average changes of image intensity that result from shifting the window by a small amount in various directions [cite](Harris 1988). This is done by defining a corner to have large eigenvalues in a given image patch [cite](Rosten 2006). One of the main advantages of the Harris operator is that it is very stable, and robust to noise cite. One of the main disadvantages is a manual, empirical parameter tuning for good performance [cite](Rosten 2006).

Proposed Approach

This paper proposes testing various machine learning algorithms on various feature descriptors, while striving for the goal of extremely fast processing time. Machine learning is used to help find optimal heuristics in a feature detection technique, such as the segment test, then directly implement in code the characteristics that are machine learned. It is desired that real-time image processing application, such as mobile robot navigation software, will benefit from using this approach.

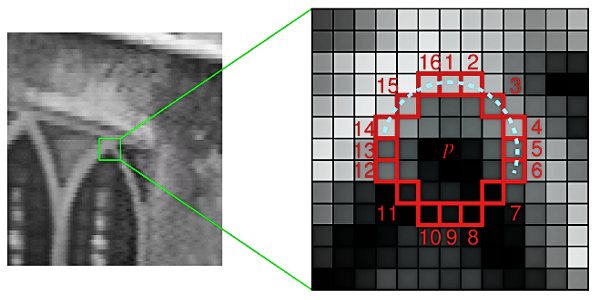

The segment test criterion operates by considering a neighborhood of pixels around corner candidates. A simple base detector classifies a corner if there exists a set of contiguous pixels in the region which are either all brighter or darker than the candidate pixel’s intensity [cite](Rosten 2006). This is a fast and simple test to be used as a starting point.

A machine learning solution is proposed here to help learn and tune better segment test heuristics automatically:

- The segment test as described above is run, detecting corners from a set of images, where every pixel is fully tested and classified. For each pixel in the neighborhood of corner candidates, a state is assigned based on whether the pixel is darker, similar, or brighter relative to the center [cite](Rosten 2006). This partitions the image into three subsets: darker, similar, brighter – based on a chosen neighborhood.

- The quality of a given corner candidate must be calculated. As a first step, the use of ID3 (Iterative Dichotomiser 3, related to information gain) is proposed for determining image entropy, but other methods and heuristics shall be researched as well. For a set of corners the entropy is calculated for the neighborhood and can represent the data in terms of the information gained from choosing each corner candidate [cite](Rosten 2006).

- Using a machine learning algorithm such as a decision tree, the correct formula for choosing corners for each neighborhood size based on whether pixels are darker, similar, or brighter, can be automatically learned. The decision tree learned here can then be translated into a “hard-coded” fast classifier routine for determining when a pixel region (from the segment test) is a corner or not. Other machine learning algorithms shall be tested as well.

This idea, of doing off-line machine learning on what makes a good corner as a preprocessing stage, is the basis for how an efficient and fast corner detector can be made.

The process just described will serve as the starting point for this project. Some questions this research will try to address are: What parameters to tune? What other learning algorithms can be used? What comparisons other than entropy are useful? Is there any other pre-processing or post-processing that should take place? What are the performance trade-offs for more elaborate feature descriptors? All of these questions and more will help guide the research. A major emphasis will be on finding the fastest possible combination of all these approaches.

Proposed Evaluation

The meaning of “corners” found in an image, however, depends on the context of the application and therefore do not necessarily correspond to physical corners in the scene cite, but rather feature points of interest. So when evaluating, “corners” may also simply refer to the found salient image features and interest points. It is necessary to define the requirements to an optimal interest operator. As criteria for a distinctive matching evaluation, the ideal candidate the characteristics proposed by cite provide good metrics for comparison:

Distinctness: unique in its neighborhood

Invariance: independent of the geometrical and radio-metrical distortions

Stability: robust to noise and blunders

Uniqueness: possess a global uniqueness

Interpretability: values should have a significant meaning for easier interpretation

Additionally, the performance of the detector under these criteria with respect to processing speed should be done as well, as one of the main goals of this project is to find the highest performing corner detection scheme without sacrificing the above mentioned qualities. It is believed this proposed solution will “shine” in the areas of speed, invariance, and stability by using the segment test, while the machine learning should help this proposed algorithm perform well in the areas of uniqueness, distinctness, and interpretability.

Proposed Research Plan

As with all major projects, it it crucial to know the goal and direction of the research, and keep track of where time is spent. The major milestones that should progress consist of:

- Feature detection algorithms research and development

- Related machine learning techniques research

- Algorithm prototyping and feature detection approach fusion

- Software prototyping and evaluation

- Software implementation and tuning

- A Final report

Resources for this project include related academic papers pertaining to machine learning and computer image processing algorithms. While there is no requirements on programming language specifics, a final code that is fast and reliable should be produced. Knowledge of code optimization techniques may serve beneficial as well.

While the final deliverable will be a very fast and quality corner detection code, another useful artifact of this proposal will be an extensive write-up of the trials and errors that may be encountered along the way. Much research will be done here to test the possibilities of combining machine learning techniques and feature detection, and any insights along the way will be noted and also be put in the final report for future reference.

Full Paper Proposal

A Machine Learning Approach to Corner Detection

Self-assessment

I am passionate about image processing, and the final proposal allowed me to explore some unfamiliar areas (feature detection) that interests me, while at the same time, relating everything back to AI. I did extensive reading on and learning about how FAST (Features from Accelerated Segment Test) works, and this was the main inspiration for the proposal. I definitely have a better understanding about FAST, SIFT and other feature detectors, and how there is much potential for machine learning to help in this area of computer vision.

Even though most of the time was spent reading related works and writing my ideas, I feel that I could have made the project a bit more aggressive. I think that I could improve the proposal by less writing on the initial steps, and more writing on more general directions of research. Although this is a fictional proposal, I felt it was a reasonable and project and able to be completed by a single person working on it for a few semesters.

Final Comments

I have always enjoyed project based courses, but this class took a completely different approach to project based courses than I have ever experienced. This method of teaching class material with lectures, focused projects, and papers [unknowingly at first] synced very well with my learning style. Besides allowing project topics to be flexible (keeps the work interest/motivation high), another good aspect of this setup was there no “traditional” format final (replaced by this page). I hope to have future classes structured in the same way!